Technology Considerations

We discuss some of the salient features of the KAT Sample Solution below. We highly recommend that you check out the latest detailed documentation on the BigBitBus Kubernetes Automation Toolkit open-source repository:

https://GitHub.com/BigBitBusInc/kubernetes-automation-toolkit

Deployment Components

Skaffold

An automated build tool for Kubernetes applications developed by Google. It handles the workflow for building, pushing, and deploying an application, which lets developers focus on developing rather than on building and deploying. Skaffold deployments are based on a “skaffold.yaml” file, which contains information such as the Docker image to build, the path to the application, and the target environment to deploy into. With this file in place, Skaffold can watch a local application directory and, whenever a file changes, automatically build, push, and deploy the application to a local or remote Kubernetes cluster. Read more here: https://skaffold.dev/docs/

In the scope of this sample, Skaffold allows us to develop the Django back-end and Vue.js front-end without spending time on deployment chores such as editing Kubernetes manifest files or rebuilding images. A quick “skaffold run” builds the application and deploys it within the Kubernetes cluster; to keep watching the source tree and redeploy on every change, use “skaffold dev” instead.

Helm

A package manager for Kubernetes. It is essentially the equivalent of Yum/Apt for Kubernetes applications. Helm creates and deploys Helm Charts, which are structured packages that bundle the Kubernetes manifests required for a deployment in a simple, standard layout. After the chart’s repository has been added to the local Helm configuration, deploying to the Kubernetes cluster takes a single “helm install [release name] [Helm Chart]” command. Read more here: https://helm.sh/docs/

In the scope of this BoosterPack, Helm Charts were made for the back-end and front-end applications to conform to a structure that other components were using. Helm was also used to install the PostgreSQL database from Bitnami, the Prometheus Operator, and Grafana.

Prometheus and Grafana

Prometheus is responsible for monitoring the activity within a Kubernetes cluster. Originally developed at SoundCloud, Prometheus aims to be an all-in-one solution for collecting, storing, and querying the metrics that a cluster produces. Its key features include a multi-dimensional time-series data model, a flexible query language, and multiple modes of graphing and dashboarding. Grafana is visualization software that allows users to better understand what is going on within a Kubernetes cluster. Grafana presents data as graphs, charts, metrics, and maps, which simplifies the overview of a complex cluster.

Grafana has built-in support for querying Prometheus. It takes the data that Prometheus collects and displays it in an organized manner through a GUI. Through the GUI, the user can track the activity of resources within a given namespace in the target cluster.

Application

Vue.js Application

An open-source JavaScript framework that focuses on building UIs. It is reactive: the data held in the JavaScript component is bound to the HTML component of the code, so when the data changes, the UI (HTML/CSS) automatically re-renders.

In the scope of this BoosterPack, the Vue.js application acts as a single-page front end. It is a GUI that simply allows users to make the standard RESTful API calls to the back-end without having to directly interact with it.

To make the API calls, the application uses “Axios”, a JavaScript HTTP client library. It sets up the basic HTTP request format that is sent to the back-end server when a call is made.

| Method | Description |
| --- | --- |
| GET | When the page is loaded, the application automatically runs a GET request, which retrieves any outstanding todos from the database. |
| POST | A text box with the question “What needs to be done” prompts the user to enter a todo. |
| PUT | Double-clicking an outstanding todo lets the user edit it. ESC cancels the edit. |
| DELETE | Hovering over an outstanding todo and clicking the red “x” removes the todo from the list. |
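The four calls in the table can be sketched in Python using only the standard library. This is an illustration of the request shapes, not the BoosterPack’s Axios code: the base URL assumes the SSH tunnel on localhost:8080, the “todos/” path and the helper name build_request are hypothetical, and the title/description fields follow the todo model described in the Django section.

```python
import json
from urllib.request import Request

# Assumption: the API is reached through the SSH tunnel, and "todos/" is a
# hypothetical collection path used only for this sketch.
API_BASE = "http://localhost:8080/djangoapi/todos/"


def build_request(method, todo_id=None, payload=None):
    """Build (but do not send) the HTTP request for one todo operation."""
    # Collection URL for listing/creating, item URL for editing/deleting.
    url = API_BASE if todo_id is None else f"{API_BASE}{todo_id}/"
    headers = {"Accept": "application/json"}
    data = None
    if payload is not None:
        data = json.dumps(payload).encode("utf-8")
        headers["Content-Type"] = "application/json"
    return Request(url, data=data, headers=headers, method=method)


list_todos = build_request("GET")
create_todo = build_request("POST", payload={"title": "Write docs", "description": ""})
update_todo = build_request("PUT", todo_id=1, payload={"title": "Write better docs", "description": ""})
delete_todo = build_request("DELETE", todo_id=1)

for req in (list_todos, create_todo, update_todo, delete_todo):
    print(req.get_method(), req.full_url)
```

Passing any of these objects to urllib.request.urlopen would send the request; the sketch stops short of that so it can be run without a live cluster.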

The CSS used by our implementation was derived from this CSS template:

https://GitHub.com/Klerith/TODO-CSS-Template

The directory that holds all the Vue.js files is within the path:

https://GitHub.com/BigBitBusInc/kubernetes-automation-toolkit/tree/main/code/app-code/frontend/ToDo-vuejs

The code behind the page that is shown to the user is in src/App.vue within that directory. There, the basic HTML/CSS components are stored within <template> tags and the JavaScript component within <script> tags.

Read more about Vue.js: Introduction (Vue.js documentation)

Django Application (API back-end)

An open-source Python web framework that provides the fundamental components of a web application out of the box. This allows users to spend more time on development instead of deployment. The syntax is easy to understand, and the framework includes the core architecture of a web server.

In the scope of the BoosterPack, the Django application acts as the back end providing the todos API. It handles the API calls and sends/retrieves information from the database.

When the application starts up, it creates a model called “todo” that contains attributes such as the title and description of a single todo item. This is the core object that the application is based on. The application establishes a connection with the database and sends the model information through a database migration. The database will then create a table which stores these todo objects.

Using the Django REST framework’s “DefaultRouter” as the router, the application automatically maps HTTP requests sent by the front-end to a “ViewSet” that operates on a queryset of all the todo objects. A “ViewSet” provides actions such as list, create, update, and destroy, which read or change the contents of the database through the model. For example, when a todo is added through the Vue.js application, the router takes the request, maps it according to its HTTP method and URL, and sends it to the “ViewSet”, which handles the request using its “create” action. When the list of todo objects changes, the reactive Vue.js application also updates its page.
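The routing idea can be sketched in plain Python. The ROUTES table below mirrors the standard method-to-action mapping that the Django REST framework applies to a model ViewSet; TodoStore and dispatch are hypothetical stand-ins (an in-memory dictionary instead of PostgreSQL) so that the sketch runs without Django installed. It is not the BoosterPack’s code.

```python
# (HTTP method, request targets a single item?) -> ViewSet action name.
ROUTES = {
    ("GET", False): "list",
    ("POST", False): "create",
    ("GET", True): "retrieve",
    ("PUT", True): "update",
    ("PATCH", True): "partial_update",
    ("DELETE", True): "destroy",
}


class TodoStore:
    """Hypothetical in-memory stand-in for the ViewSet and its todo table."""

    def __init__(self):
        self._todos = {}
        self._next_id = 1

    def list(self, todo_id=None, data=None):
        return list(self._todos.values())

    def create(self, todo_id=None, data=None):
        todo = {"id": self._next_id, **data}
        self._todos[todo["id"]] = todo
        self._next_id += 1
        return todo

    def retrieve(self, todo_id=None, data=None):
        return self._todos[todo_id]

    def update(self, todo_id=None, data=None):
        # Full replacement of the stored todo.
        self._todos[todo_id] = {"id": todo_id, **data}
        return self._todos[todo_id]

    def partial_update(self, todo_id=None, data=None):
        # Only the supplied fields change.
        self._todos[todo_id].update(data)
        return self._todos[todo_id]

    def destroy(self, todo_id=None, data=None):
        return self._todos.pop(todo_id)


def dispatch(store, method, todo_id=None, data=None):
    """Pick the action from the method and URL shape, then call it."""
    action = ROUTES[(method, todo_id is not None)]
    return getattr(store, action)(todo_id=todo_id, data=data)


store = TodoStore()
created = dispatch(store, "POST", data={"title": "Buy milk", "description": "2 litres"})
dispatch(store, "PUT", todo_id=created["id"], data={"title": "Buy oat milk", "description": "1 litre"})
print(dispatch(store, "GET"))
dispatch(store, "DELETE", todo_id=created["id"])
print(dispatch(store, "GET"))
```

In the real application the router, ViewSet, and serializer classes supplied by the Django REST framework take the place of ROUTES, TodoStore, and dispatch.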

We derived our todos API code from this original location (Django REST Framework Todo API Tutorial):

https://GitHub.com/wsvincent/drf-todo-api

The directory that holds all the Django code files is within the path:

https://GitHub.com/BigBitBusInc/kubernetes-automation-toolkit/tree/main/code/app-code/api/todo-python-django

Read more about Django: https://docs.djangoproject.com/en/3.1/

Other Considerations

Security

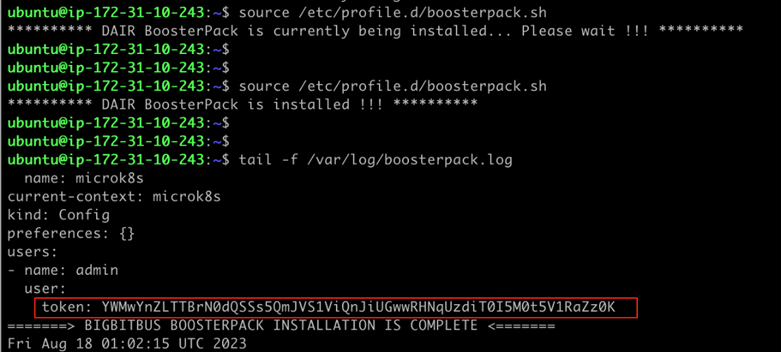

The DAIR VM running the Kubernetes cluster that hosts our todos application is only accessible on port 22 (SSH). We use secure tunnelling from our PC into this VM to explore the application and Grafana endpoints. We also patch the Ubuntu VM automatically when it is provisioned via the Application Blueprint.

All Helm Charts within this sample contain commented-out TLS/SSL code blocks that can be activated once you obtain a valid TLS certificate for your domain. In the real world, you would not connect via an SSH tunnel but would instead use TLS encryption to connect via the secure HTTPS protocol. The “values.yaml” files contain commented-out sections for using your own SSL certificates when it is time to deploy this for external user access. For more information on how to work with TLS and Ingress start here.

In the KAT Sample Solution, we have used the root-admin Kubernetes credentials and granted them to the user within the VM. In reality, for any production cluster where multiple users/services access Kubernetes, the best practice is to set up role-based access control. We encourage you to consider the many aspects of security before using Kubernetes in a production environment.

We have used Kubernetes Secrets (for example, to store the Postgres password and pass it into our Helm Chart). We recommend using Kubernetes Secrets instead of plain-text ConfigMaps whenever sensitive data is stored in Kubernetes.
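As a rough illustration of what a Secret holds, the sketch below builds a Secret manifest in Python. Values in a Secret’s “data” field are base64-encoded. The Secret name, the key name, and the password are placeholders invented for this example; they are not taken from the BoosterPack’s Helm Charts.

```python
import base64
import json


def encode_secret_value(plaintext):
    """Base64-encode one value the way a Secret's data field expects."""
    return base64.b64encode(plaintext.encode("utf-8")).decode("ascii")


def build_secret_manifest(name, values):
    """Return a Secret manifest as a dictionary (JSON is valid manifest syntax)."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": {key: encode_secret_value(value) for key, value in values.items()},
    }


# Hypothetical names and password, for illustration only.
manifest = build_secret_manifest(
    "postgres-credentials",
    {"postgres-password": "example-password"},
)
print(json.dumps(manifest, indent=2))
```

Base64 is an encoding, not encryption: anyone who can read the Secret can decode it, so access to Secrets should still be restricted, for example through role-based access control.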

If you are interested in learning more about Kubernetes security, here are some excellent resources to get started:

- Kubernetes official documentation: Securing a Cluster https://kubernetes.io/docs/concepts/security/

- Automating Certificate Management in Cloud-Native Environments via Cert-Manager https://cert-manager.io/

Networking

As mentioned earlier, we use NGINX Ingress to expose the application endpoints to the outside world. Once the DAIR VM has been created, open an SSH tunnel on local port 8080 that connects to the HTTP port 80 on the DAIR VM.
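The tunnel described above corresponds to an ordinary SSH local port forward. The sketch below only assembles and prints the command; the IP address is a documentation placeholder and the “ubuntu” user name is an assumption, so substitute the address and login of your own DAIR VM.

```python
import shlex


def tunnel_command(vm_host, user="ubuntu", local_port=8080, remote_port=80):
    """Assemble an ssh command that forwards local_port to the VM's HTTP port."""
    return [
        "ssh",
        "-N",  # do not run a remote command, only forward ports
        "-L", f"{local_port}:localhost:{remote_port}",  # local port forward
        f"{user}@{vm_host}",
    ]


# Placeholder address from the documentation range; replace with your VM's IP.
cmd = tunnel_command("203.0.113.10")
print(shlex.join(cmd))  # ssh -N -L 8080:localhost:80 ubuntu@203.0.113.10
```

The printed command can be pasted into a terminal, or the list can be handed to subprocess.run to open the tunnel from a script.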

After this, the user can access the application’s components via their PC web browser at the locations below. Note that the trailing forward slashes are required.

Endpoints (after connecting via the SSH tunnel on localhost:8080)

| Component | URL |
| --- | --- |
| todos Front end | http://localhost:8080/frontend/ |
| API Back end | http://localhost:8080/djangoapi/ |
| Grafana Monitoring | http://localhost:8080/monitoring-grafana/ |
| Kubernetes Dashboard | http://localhost:8080/dashboard/ |

We use the NGINX Ingress Controller in the KAT Sample Solution, with the NGINX server acting as a reverse proxy and load balancer. This opens up many possibilities for configuring Layer-7 networking and routing requests: NGINX is extremely versatile and comes with many options to shape your traffic.

Learn more about Kubernetes NGINX Ingress here.

Scaling

The “values.yaml” files in the Helm Charts for the front end and the back end have a parameter called “replicas” that sets the number of replicas. Additionally, the Kubernetes Horizontal Pod Autoscaler is enabled so that the number of replicas automatically scales with the CPU usage in the pods. Please refer to the “values.yaml” files in the Helm Charts to understand how these parameters are configured. You can experiment with the number of replicas in each deployment’s values.yaml file.
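To give a feel for how the Horizontal Pod Autoscaler arrives at a replica count, here is a minimal sketch of the scaling rule described in the Kubernetes documentation: the desired count is the current count multiplied by the ratio of observed to target utilization, rounded up, then clamped to the configured bounds. The target, minimum, and maximum used below are hypothetical and are not the values from the BoosterPack’s charts; the real controller also applies a tolerance and stabilization behaviour that this sketch omits.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent, target_cpu_percent,
                     min_replicas=1, max_replicas=10):
    """Simplified HPA rule: scale by the ratio of observed to target CPU usage."""
    raw = math.ceil(current_replicas * current_cpu_percent / target_cpu_percent)
    # Keep the result within the configured bounds.
    return max(min_replicas, min(max_replicas, raw))


# Hypothetical numbers: a 60% CPU target.
print(desired_replicas(2, 90, 60))   # 2 * 90 / 60 = 3.0  -> 3 replicas
print(desired_replicas(4, 20, 60))   # 4 * 20 / 60 = 1.33 -> 2 replicas
print(desired_replicas(8, 120, 60))  # 16 would exceed the maximum -> 10 replicas
```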

Availability

Kubernetes can create multiple pods running the same software (for example, our Django API back-end or Vue.js front-end) so that the application becomes highly available. This pattern is particularly important when using a multi-node Kubernetes cluster. For example, when the todos application runs in a multi-node Kubernetes cluster and one node fails, the application stays alive on the other node(s), and Kubernetes automatically detects the failure and reschedules the affected pods onto healthy nodes. This is transparent to the end-user of the application and greatly simplifies the engineering effort to operate and sustain applications in a highly available production environment.

Cost

The cost of a Kubernetes cluster is usually proportional to the number of worker nodes in the cluster. For the KAT Sample Solution, we are simply using a single-node cluster on a single virtual machine. In the real world, a cluster has multiple control-plane and worker nodes, as well as networking and load-balancing costs that can grow significantly as the cluster is scaled up. To get an idea of how much a “real-world” Kubernetes cluster can cost, we recommend using one of the public cloud cost calculators (see link below).

License

All components of the KAT Sample Solution are subject to open-source licenses; please refer to their respective source repositories to read the licensing terms. The KAT BoosterPack code and documentation license is available here:

https://GitHub.com/BigBitBusInc/kubernetes-automation-toolkit/blob/main/LICENSE.md

Source Code

The updated source code can be found here:

https://GitHub.com/BigBitBusInc/kubernetes-automation-toolkit

Glossary

The following terminology, as defined below, may be used throughout this document.

| Term | Description | Link/Further Reading |
| --- | --- | --- |
| Django | Python-based free and open-source web framework | |
| Helm | A package manager for Kubernetes | |
| Kubernetes | An open-source container-orchestration system for automating computer application deployment, scaling, and management | |
| MicroK8s | A lightweight, production-ready Kubernetes distribution | |
| PostgreSQL | An open-source relational database management system | |
| Prometheus and Grafana | Time series database and metrics querying system and UI | |
| Skaffold | A build and deploy tool for Kubernetes | |
| Vue.js | An open-source front end JavaScript framework for building user interfaces and single-page applications | |